No Virtualization Tax for MLPerf Inference v3.0 Using NVIDIA Hopper and Ampere vGPUs and NVIDIA AI Software with vSphere 8.0.1 - VROOM! Performance Blog

Description

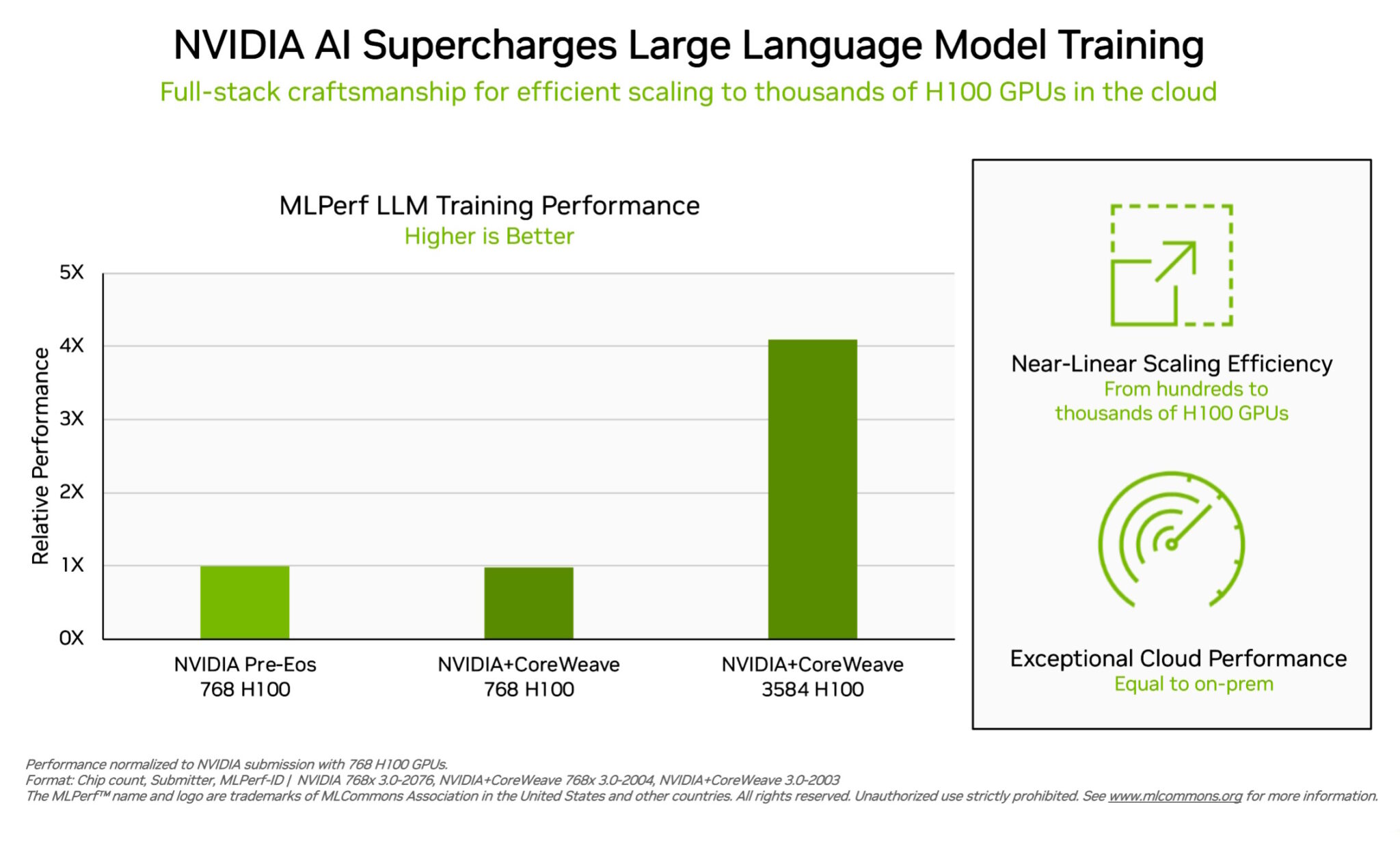

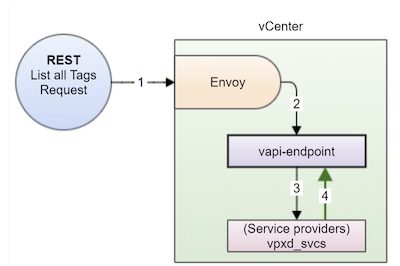

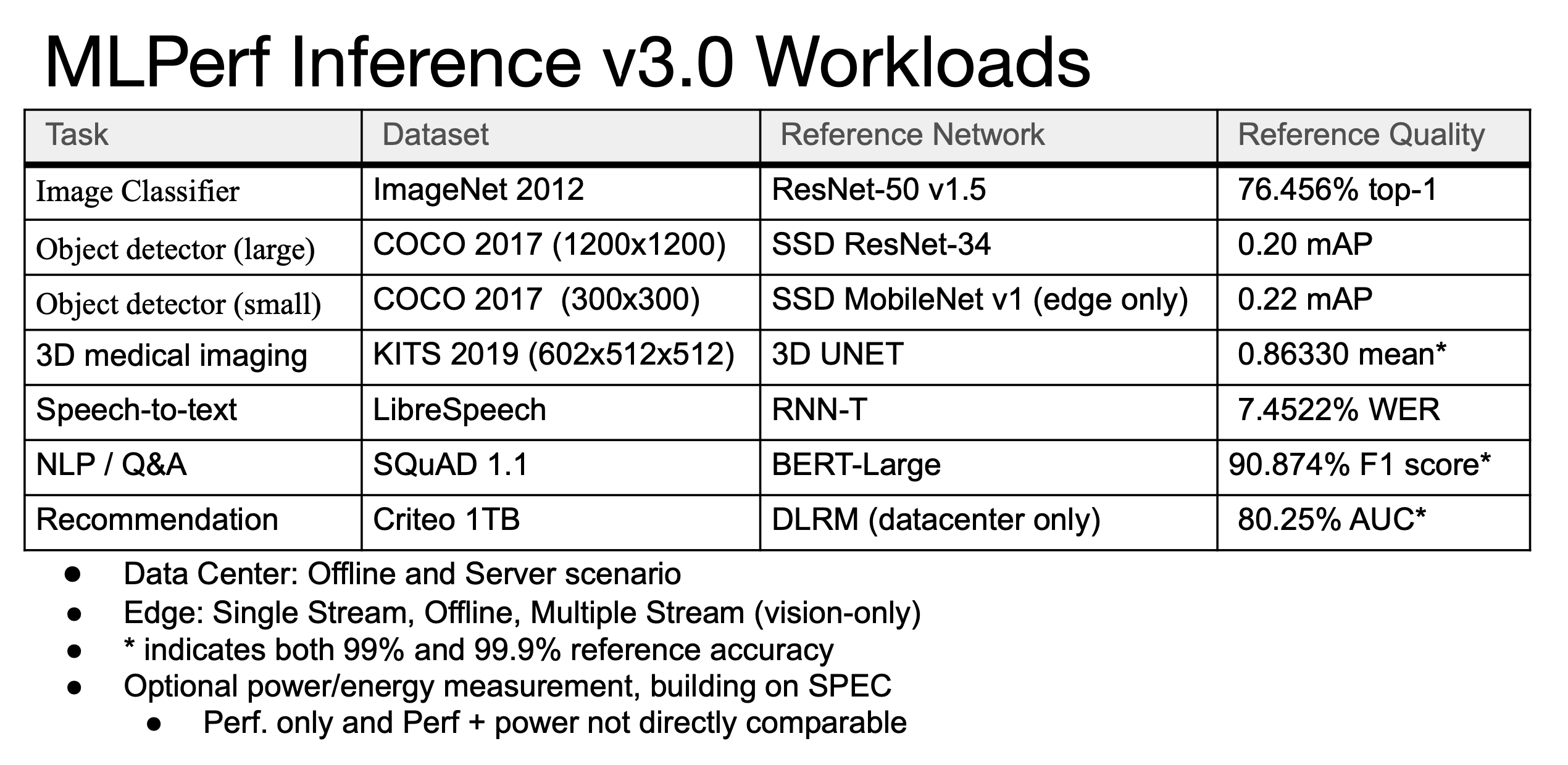

In this blog post, we present the MLPerf Inference v3.0 test results for the VMware vSphere virtualization platform with NVIDIA H100- and A100-based vGPUs. Our tests show that when NVIDIA vGPUs are used in vSphere, workload performance is the same as or better than on a bare metal system.

virtualization Archives - VROOM! Performance Blog

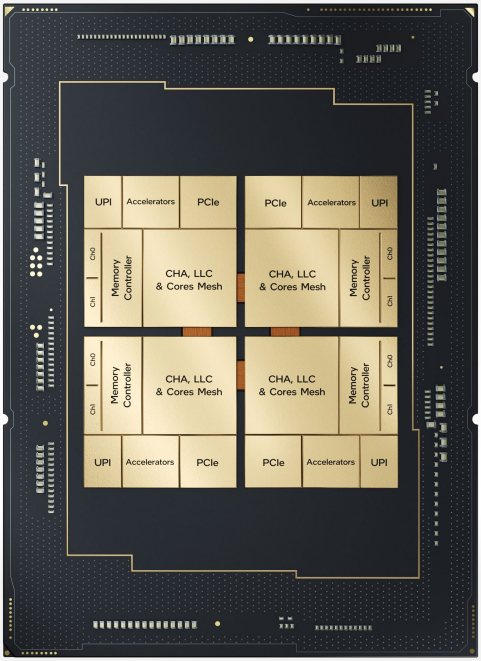

NVIDIA Grace Hopper Superchip Dominates MLPerf Inference Benchmarks

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT

Release Notes - NVIDIA Docs

Setting New Records in MLPerf Inference v3.0 with Full-Stack Optimizations for AI

NVIDIA Posts Big AI Numbers In MLPerf Inference v3.1 Benchmarks With Hopper H100, GH200 Superchips & L4 GPUs

Leading MLPerf Inference v3.1 Results with NVIDIA GH200 Grace Hopper Superchip Debut

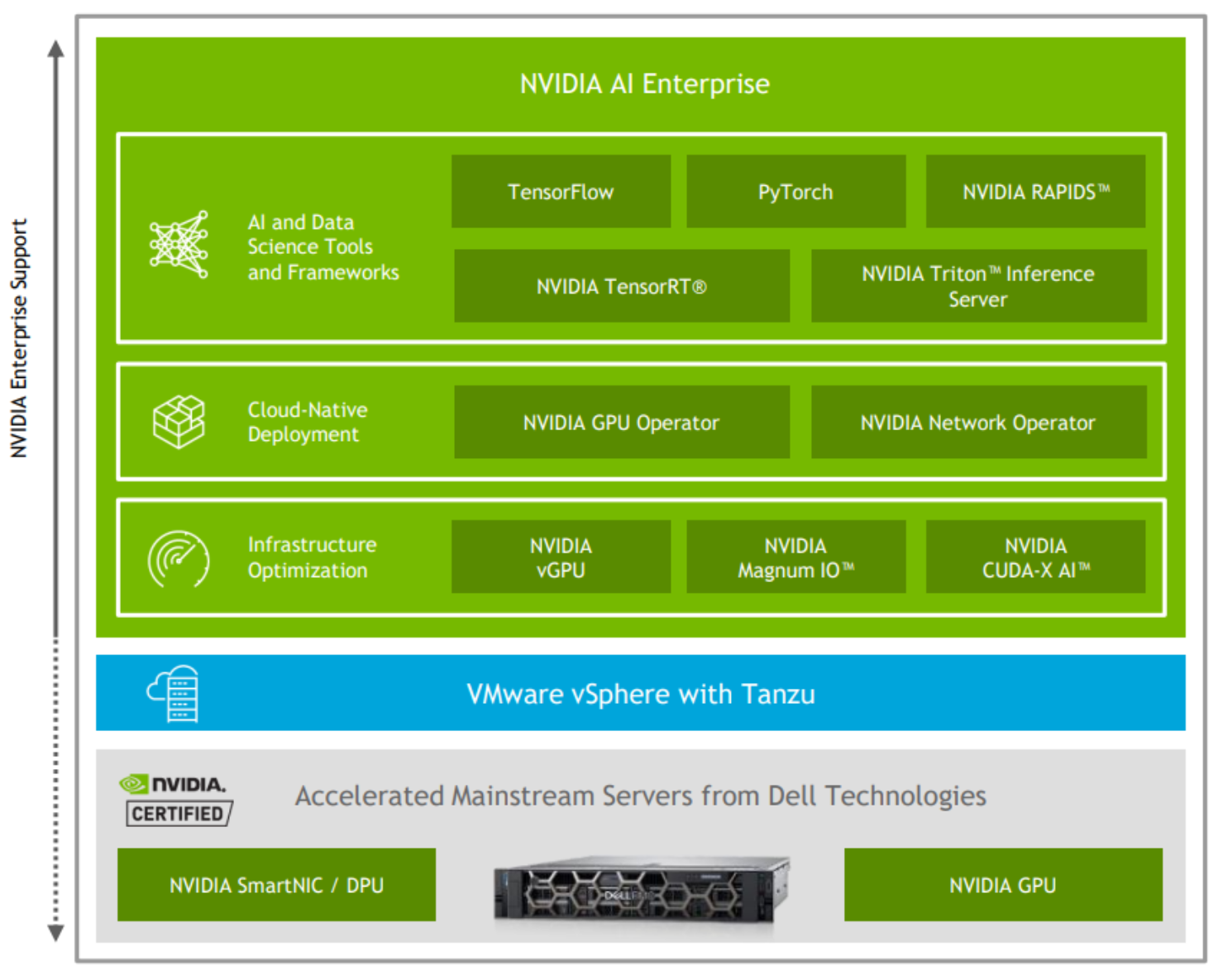

NVIDIA, White Paper - Virtualizing GPUs for AI with VMware and NVIDIA Based on Dell Infrastructure
