Six Dimensions of Operational Adequacy in AGI Projects — LessWrong

By a mysterious writer

Description

Editor's note: The following is a lightly edited copy of a document written by Eliezer Yudkowsky in November 2017. Since this is a snapshot of Eliez…

What does it take to defend the world against out-of-control AGIs

Review Voting — AI Alignment Forum

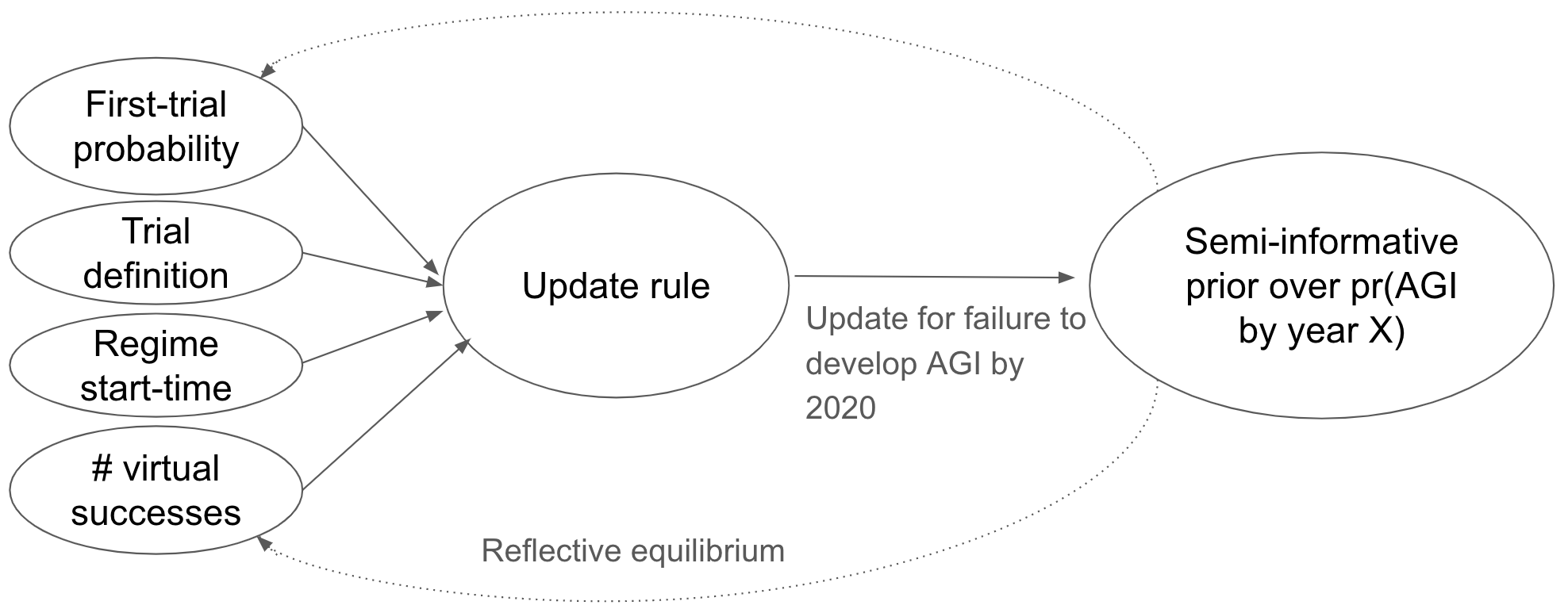

Semi-informative priors over AI timelines

The Wizard of Oz Problem: How incentives and narratives can skew

OpenAI, DeepMind, Anthropic, etc. should shut down. — LessWrong

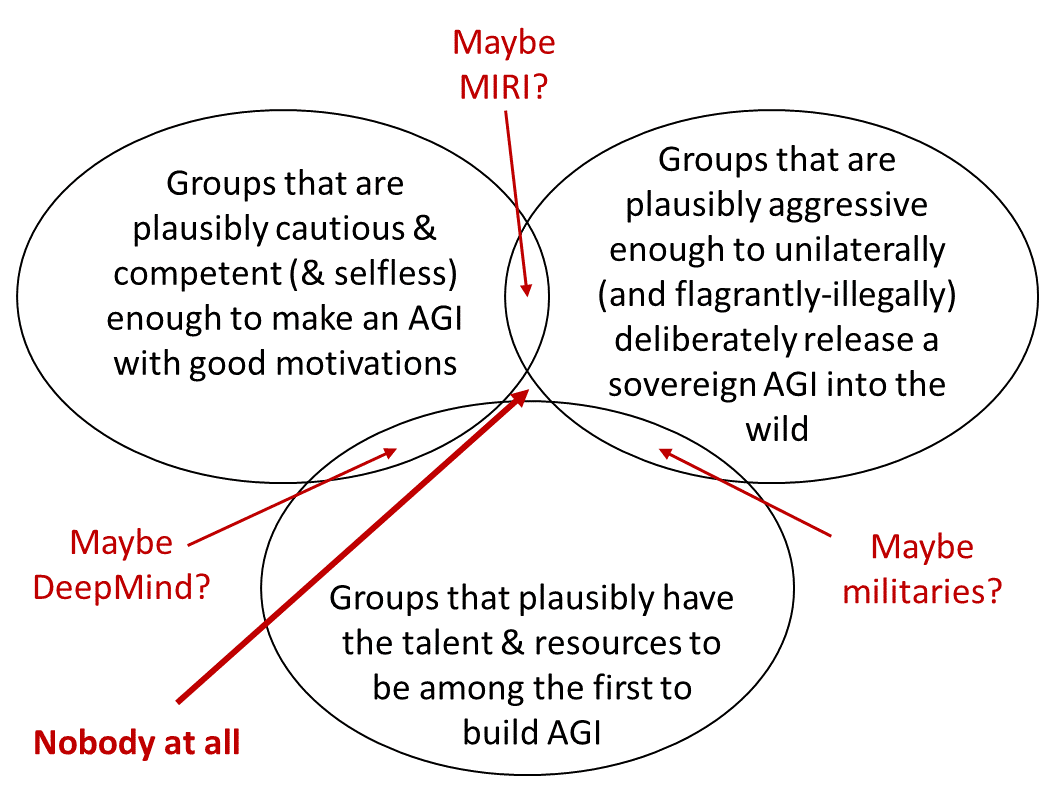

Without specific countermeasures, the easiest path to

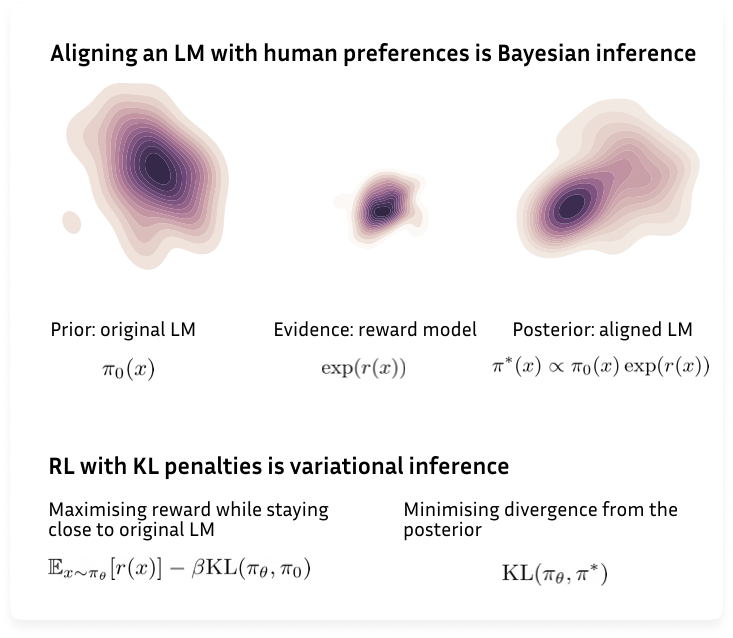

My thoughts on OpenAI's alignment plan — LessWrong

25 of Eliezer Yudkowsky Podcasts Interviews

AGI Ruin: A List of Lethalities — AI Alignment Forum

(PDF) Concepts in Advanced AI Governance: A Literature Review of

Lesswrong Seq PDF, PDF, Rationality

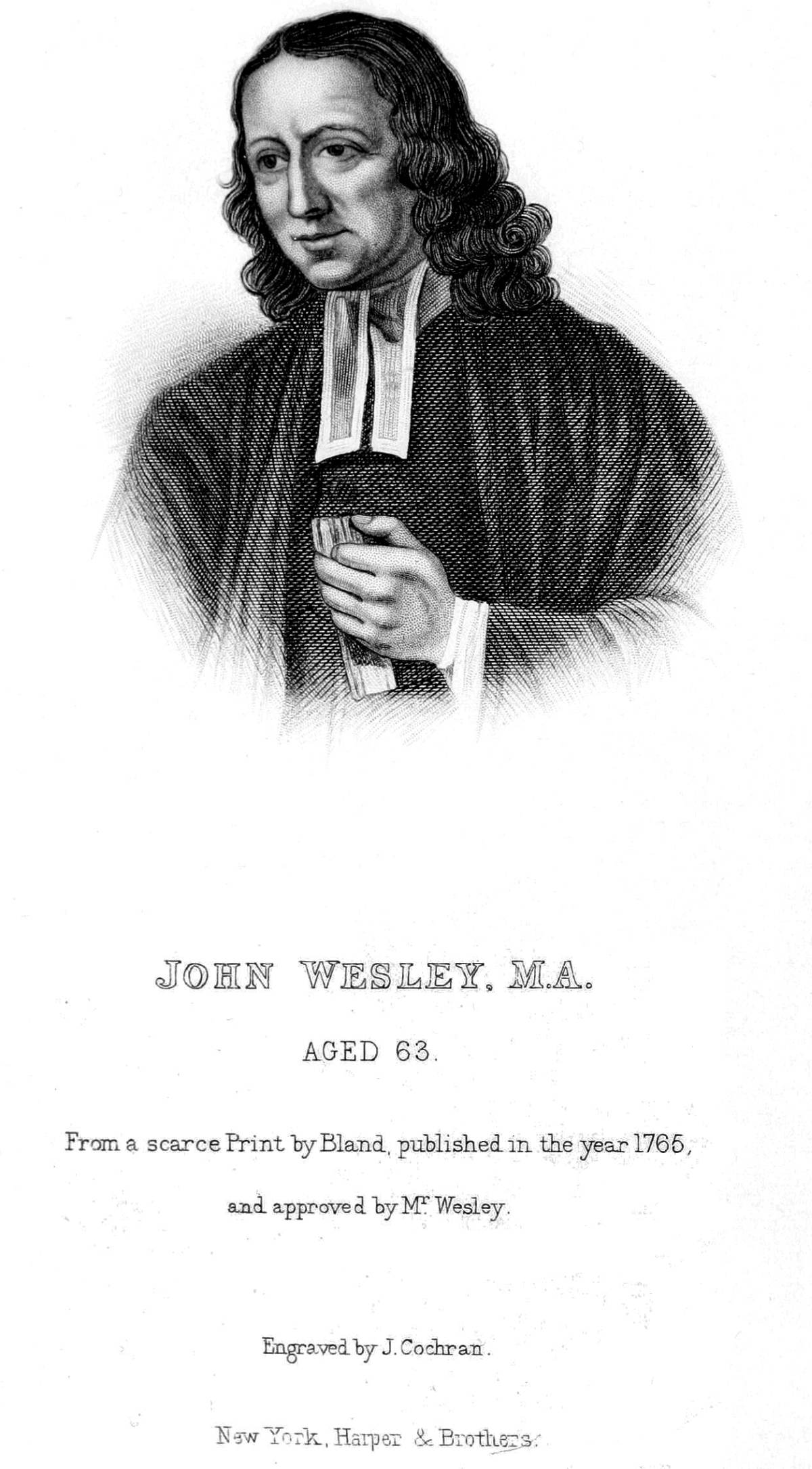

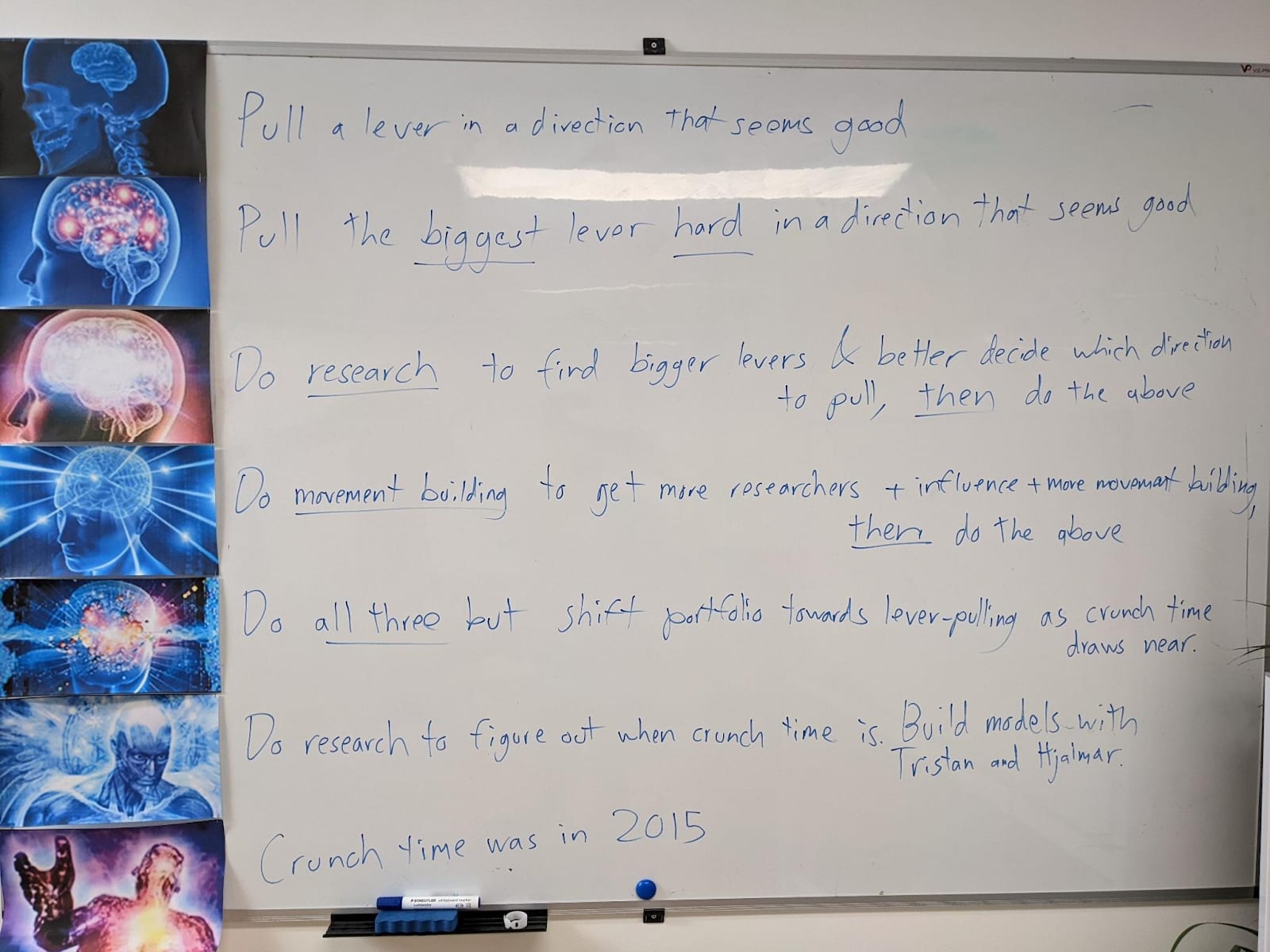

Six Dimensions of Operational Adequacy in AGI Projects (Eliezer
